Image courtesy of Bangkok City Lab

Project Concept

Project AIRA (AI Research Assistant for Urban Field Photos) is an experimental prototype developed by the Future Curiosity Lab unit of Bangkok City Lab (ศูนย์การทดลองเมืองกรุงเทพมหานคร). It explores how artificial intelligence (AI) can be used in urban research, particularly in analyzing field photographs taken in public spaces.

The system is designed so that AI handles repetitive tasks, such as detecting social behavior, crowd density, and other elements within a space. It then converts what it finds into descriptions and structured data (JSON format), such as counts of people, vehicles, and trees, or the overall atmosphere. This data is stored in the cloud and summarized into trends on a dashboard that shows the overall picture and emerging patterns.

Although AI performs repetitive work efficiently and can reveal details that might otherwise be overlooked, in-depth analysis of the meaning and context of the data remains the work of experts. The goal of Project AIRA is to use contemporary technology to strengthen researchers' capabilities, support in-depth analysis, and help design urban policies and services grounded in data-informed thinking and vision.

About the Project:

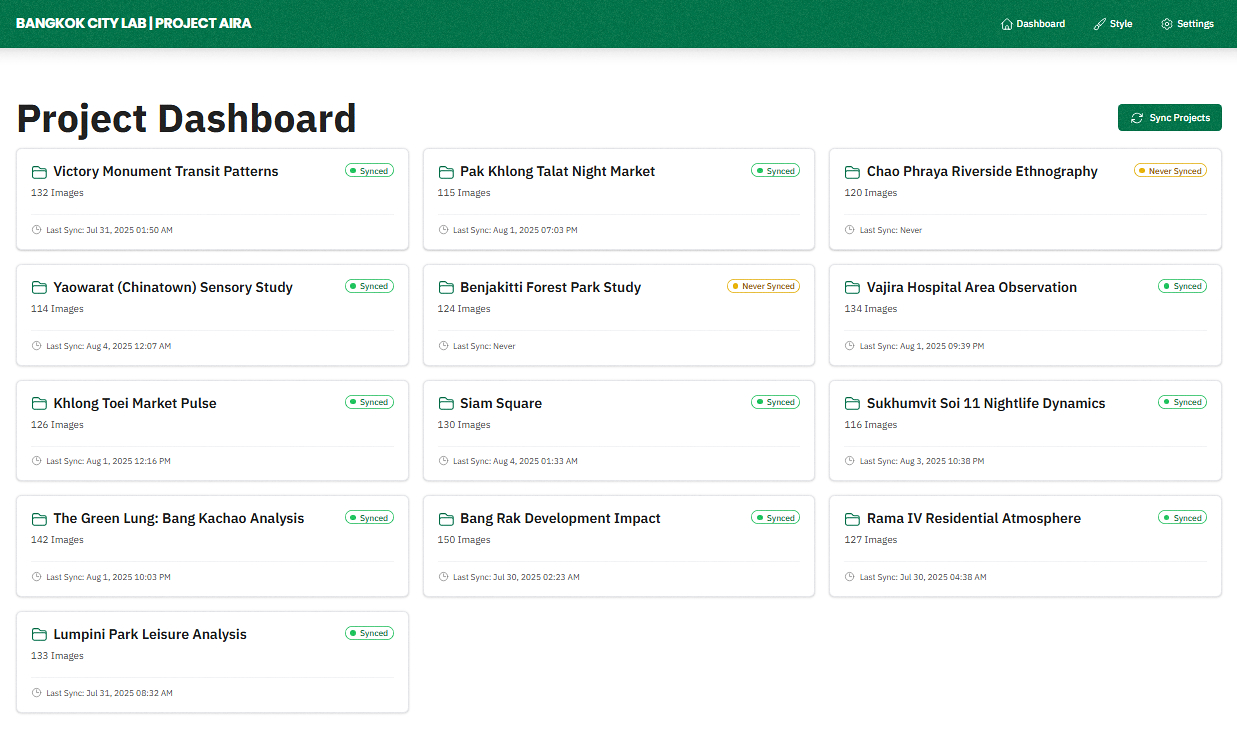

Project AIRA (AI Research Assistant for Urban Field Photos) is both an experimental research project and an early-stage prototype developed by Future Curiosity Lab, a sub-unit of Bangkok City Lab (ศูนย์การทดลองเมืองกรุงเทพมหานคร). It draws on three of our ongoing research themes: Beyond Human Intelligence, The Future of Labour, and The Cluster in the City. These themes explore how emerging technologies can help us observe, interpret, and make sense of urban behaviors that are often overlooked.

AIRA functions as an AI-powered assistant for researchers. Originally developed for internal use at Bangkok City Lab, the prototype helps analyze large sets of field photos capturing street life, public spaces, and everyday moments in Bangkok. By automating repetitive tasks and surfacing subtle patterns across images, AIRA supports deeper analysis of spatial usage, social behavior, and cultural context.

Project Objectives:

- To improve the efficiency and scalability of urban fieldwork by enabling rapid processing and analysis of large volumes of field photographs from diverse public spaces.

- To explore the potential of AI in detecting subtle spatial patterns and social behaviors—such as mood, use intensity, and informal activities—that are often overlooked in manual observation.

- To minimize repetitive and mechanical tasks so that researchers can focus on deeper interpretive analysis, thematic synthesis, and actionable insights.

- To prototype AI as both a research tool and collaborator, testing how machine assistance can extend ethnographic and spatial research in meaningful ways.

- To experiment internally with tool-building as part of our methodology, developing systems that are not off-the-shelf but are designed to align with the specific needs, questions, and rhythms of our research practice.

Research Context:

This project explores how AI can support urban research by helping us observe and understand everyday life in public spaces.

Our starting point is a practical challenge: traditional field observation is useful but limited. It requires time, staffing, and sustained attention, and it cannot easily scale across multiple locations or long periods. AIRA was created to address this gap. It enables researchers to analyze large sets of field photos, making it possible to observe many sites at once and over time.

The project integrates multiple research interests. It connects urban and spatial studies, behavioral and social observation, and AI-supported analysis. Methodologically, it combines passive data collection with scalable observation to extend a researcher’s reach across time and space. AIRA is not intended to replace human interpretation but to support it by identifying patterns, making comparisons, and posing new questions.

Methodology

The source data for AIRA comes from regular site visits conducted by the research team at Bangkok City Lab. During these visits, researchers take photographs of public spaces in various parts of the city. These images are uploaded to a shared cloud folder, organized by location. They document a range of everyday urban conditions—people, vehicles, animals, greenery, and social activity.

Once uploaded, the images are processed through a multi-step AI analysis pipeline:

- First, a multimodal AI model examines each photo and generates a narrative description focusing on atmosphere, behaviors, and environmental features.

- Next, the outputs are structured into a standard data format (JSON) with variables such as the number of people, vehicles, animals, and bicycles, the level of greenery, the visible mood, and the time of day.

- Finally, a second AI agent reviews the full dataset to identify patterns, highlight recurring elements, and produce thematic summaries that support further interpretation.
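The project does not publish its data schema, so the following is only a sketch of what the structuring step might look like: it parses one model response into a typed Python record and rejects malformed counts before they reach the dashboard. The field names, the `parse_record` helper, and the idea of taking the location from the cloud folder name are all assumptions for illustration.

```python
import json
from dataclasses import dataclass

# Hypothetical schema: the variables come from the project description,
# but the key names and types below are assumptions.
@dataclass(frozen=True)
class PhotoRecord:
    location: str     # assumed to come from the cloud folder name
    people: int
    vehicles: int
    animals: int
    bicycles: int
    greenery: str     # assumed label, e.g. "low" / "medium" / "high"
    mood: str         # assumed label, e.g. "relaxed"
    time_of_day: str  # assumed label, e.g. "morning"

COUNT_FIELDS = ("people", "vehicles", "animals", "bicycles")
LABEL_FIELDS = ("greenery", "mood", "time_of_day")

def parse_record(raw: str, location: str) -> PhotoRecord:
    """Parse one model response (a JSON string) into a validated record."""
    data = json.loads(raw)
    for key in COUNT_FIELDS:
        value = data.get(key)
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(value, bool) or not isinstance(value, int) or value < 0:
            raise ValueError(f"{key!r} must be a non-negative integer, got {value!r}")
    for key in LABEL_FIELDS:
        if not isinstance(data.get(key), str):
            raise ValueError(f"{key!r} must be a string")
    fields = {key: data[key] for key in COUNT_FIELDS + LABEL_FIELDS}
    return PhotoRecord(location=location, **fields)

# Made-up model output for illustration only.
raw = ('{"people": 12, "vehicles": 3, "animals": 1, "bicycles": 2, '
       '"greenery": "high", "mood": "relaxed", "time_of_day": "evening"}')
record = parse_record(raw, location="Site A")
print(record.people, record.mood)  # 12 relaxed
```

Validating at this stage means a model that returns, say, `"people": "a few"` fails loudly instead of silently skewing later aggregates.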

This process allows researchers to compare conditions across different areas, monitor changes over time, and explore urban dynamics at a larger scale than manual observation typically allows.
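As a rough illustration of comparing areas and tracking change over time, the hypothetical helper below averages one count field over groups of records, for example by location, or by location and time of day. It assumes each record is a dict shaped like the per-photo JSON; the keys are placeholders, not AIRA's actual field names.

```python
from collections import defaultdict
from statistics import mean

def mean_count_by(records, key_fields, count_field="people"):
    """Average `count_field` over groups of records sharing `key_fields`.

    `records` are dicts shaped like the per-photo JSON; the key names
    used here are hypothetical.
    """
    groups = defaultdict(list)
    for rec in records:
        key = tuple(rec[field] for field in key_fields)
        groups[key].append(rec[count_field])
    return {key: mean(values) for key, values in groups.items()}

# Made-up records for illustration only.
records = [
    {"location": "Site A", "time_of_day": "morning", "people": 4},
    {"location": "Site A", "time_of_day": "evening", "people": 20},
    {"location": "Site B", "time_of_day": "evening", "people": 7},
    {"location": "Site A", "time_of_day": "evening", "people": 18},
]
# Site A averages 14 people per photo, Site B averages 7.
print(mean_count_by(records, ["location"]))
# Splitting by time of day shows Site A is much busier in the evening.
print(mean_count_by(records, ["location", "time_of_day"]))
```

Grouping by a date or month field in the same way would give a simple time series per site.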

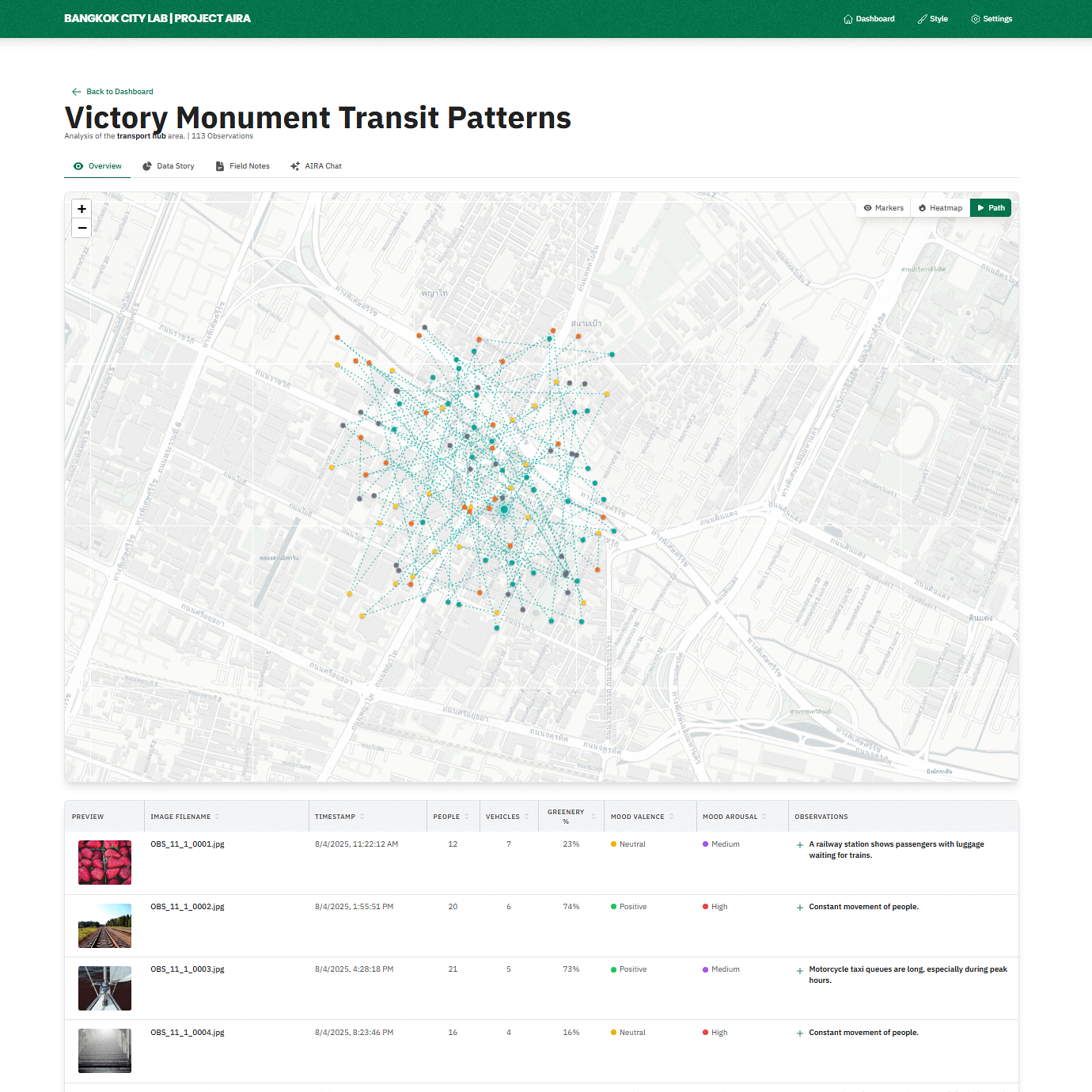

AIRA Dashboard

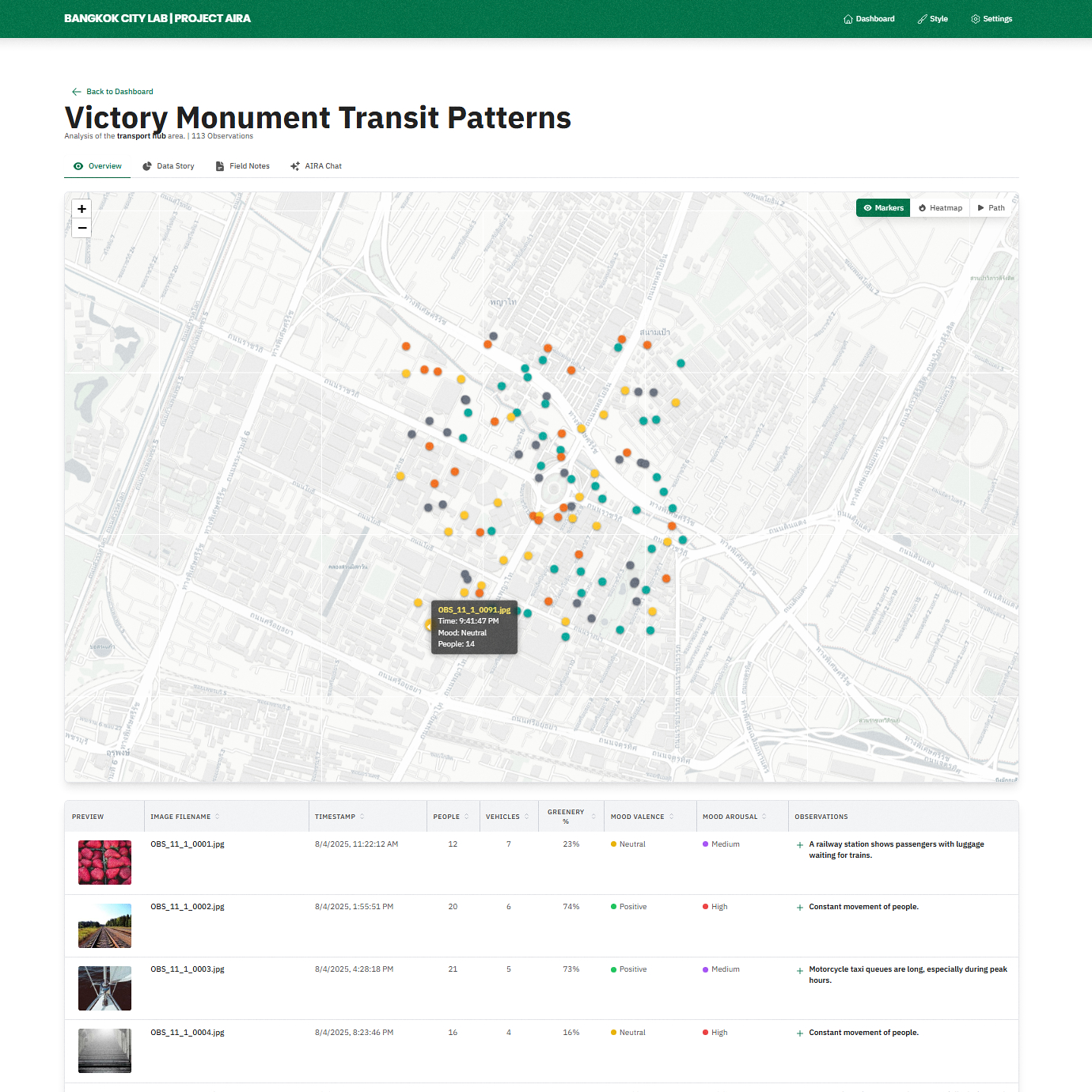

The analysis results are displayed in a clear, interactive dashboard designed to support exploration and insight. The dashboard includes the following pages:

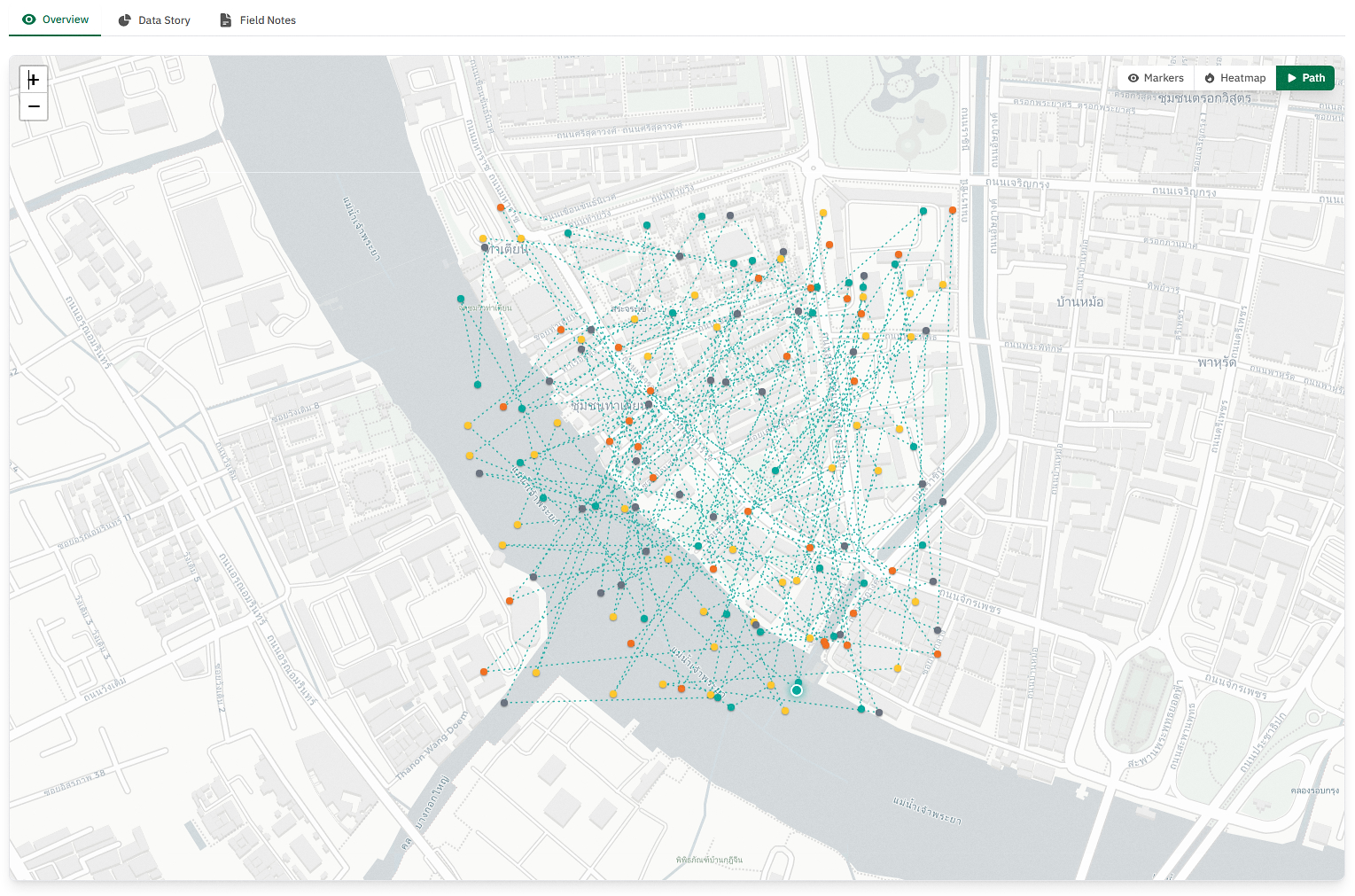

Map Overview:

Displays the locations where photos were taken across the city. Users can view spatial patterns through heatmaps and time-based animations. Each photo and its analysis are listed below the map, allowing side-by-side comparison between the image and the AI-generated data.

Map Overview

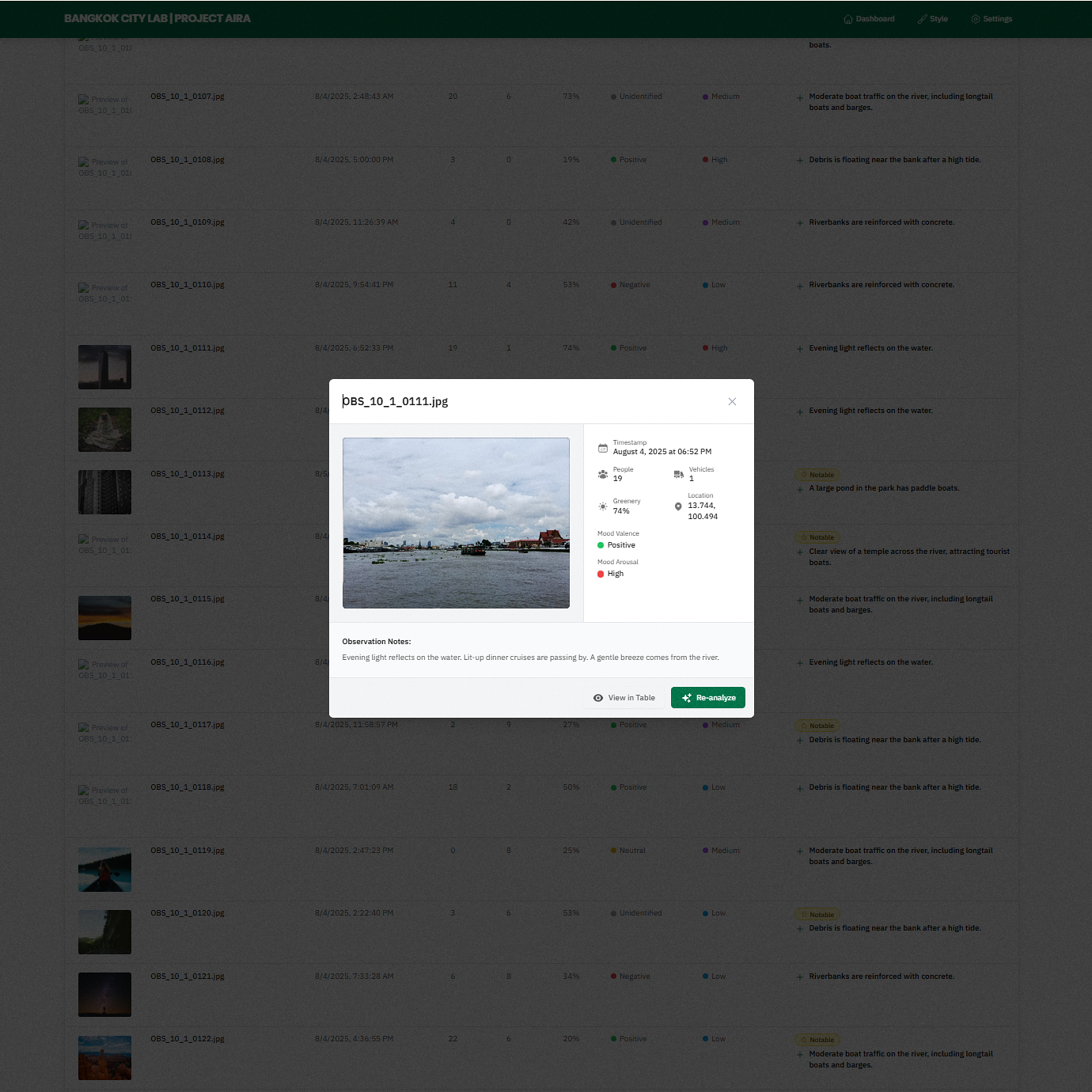

Image-based observation

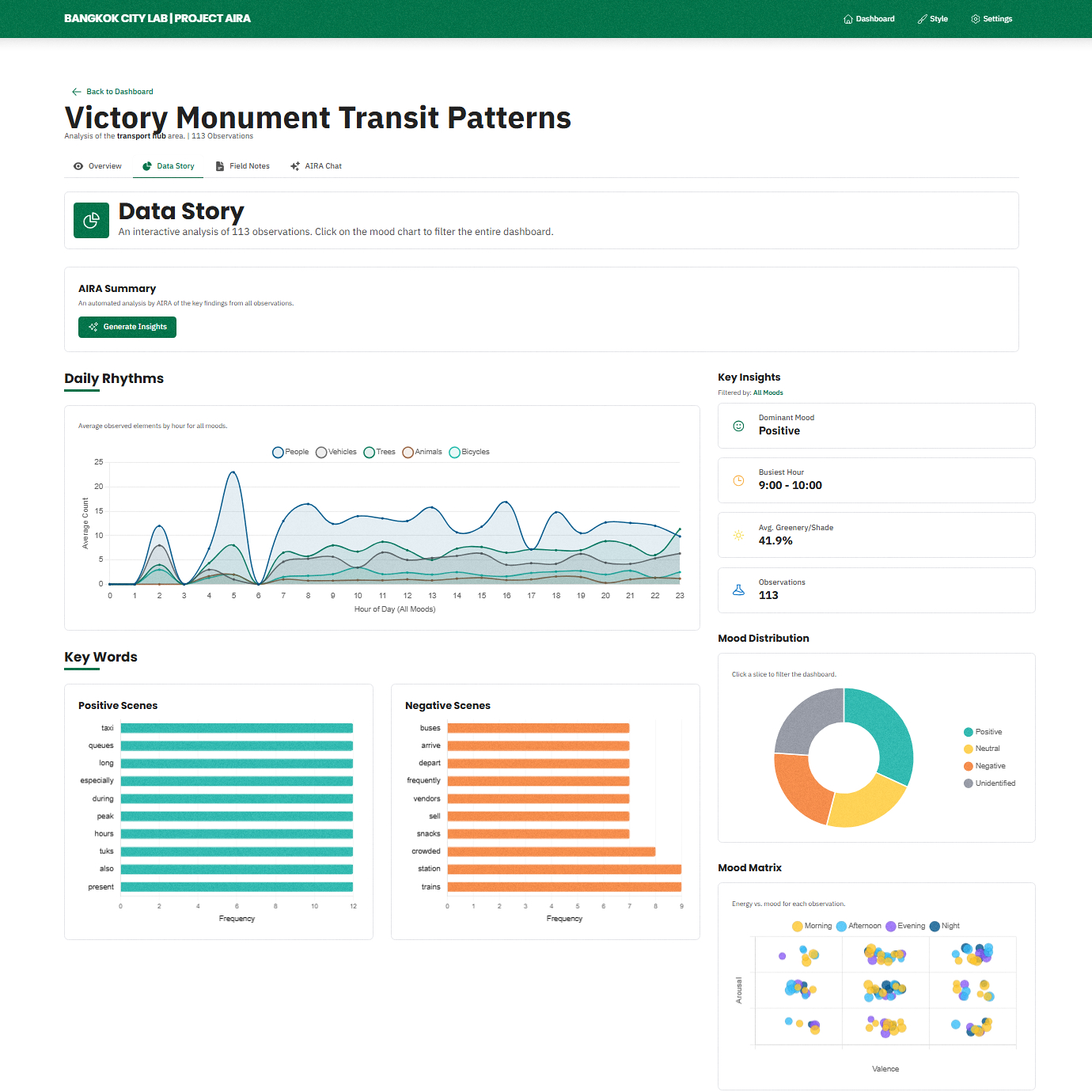
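One simple way to prepare data for a heatmap like the one on the Map Overview page is to bin photo coordinates into a regular grid and count photos per cell. The sketch assumes each photo has a latitude/longitude pair; the `heatmap_bins` name and the 0.01-degree cell size are illustrative choices, not details of the AIRA dashboard.

```python
import math
from collections import Counter

def heatmap_bins(points, cell_deg=0.01):
    """Count photos per square grid cell `cell_deg` degrees on a side.

    `points` is an iterable of (latitude, longitude) pairs. The grid
    approach and cell size are illustrative assumptions.
    """
    counts = Counter()
    for lat, lon in points:
        # floor() keeps cell boundaries consistent for negative coordinates too.
        cell = (math.floor(lat / cell_deg), math.floor(lon / cell_deg))
        counts[cell] += 1
    return counts

# Made-up coordinates in central Bangkok, for illustration only.
photos = [(13.7451, 100.5012), (13.7459, 100.5018), (13.7601, 100.5402)]
for cell, n in heatmap_bins(photos).most_common():
    print(cell, n)
```

Running the same binning separately for each time slice (say, each hour of the day) would produce the frames for a time-based animation.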

Data Story:

Presents key metrics—such as mood distribution, activity levels, and greenery—through simple charts and visual summaries. This view supports pattern recognition and high-level comparisons.

Data Story

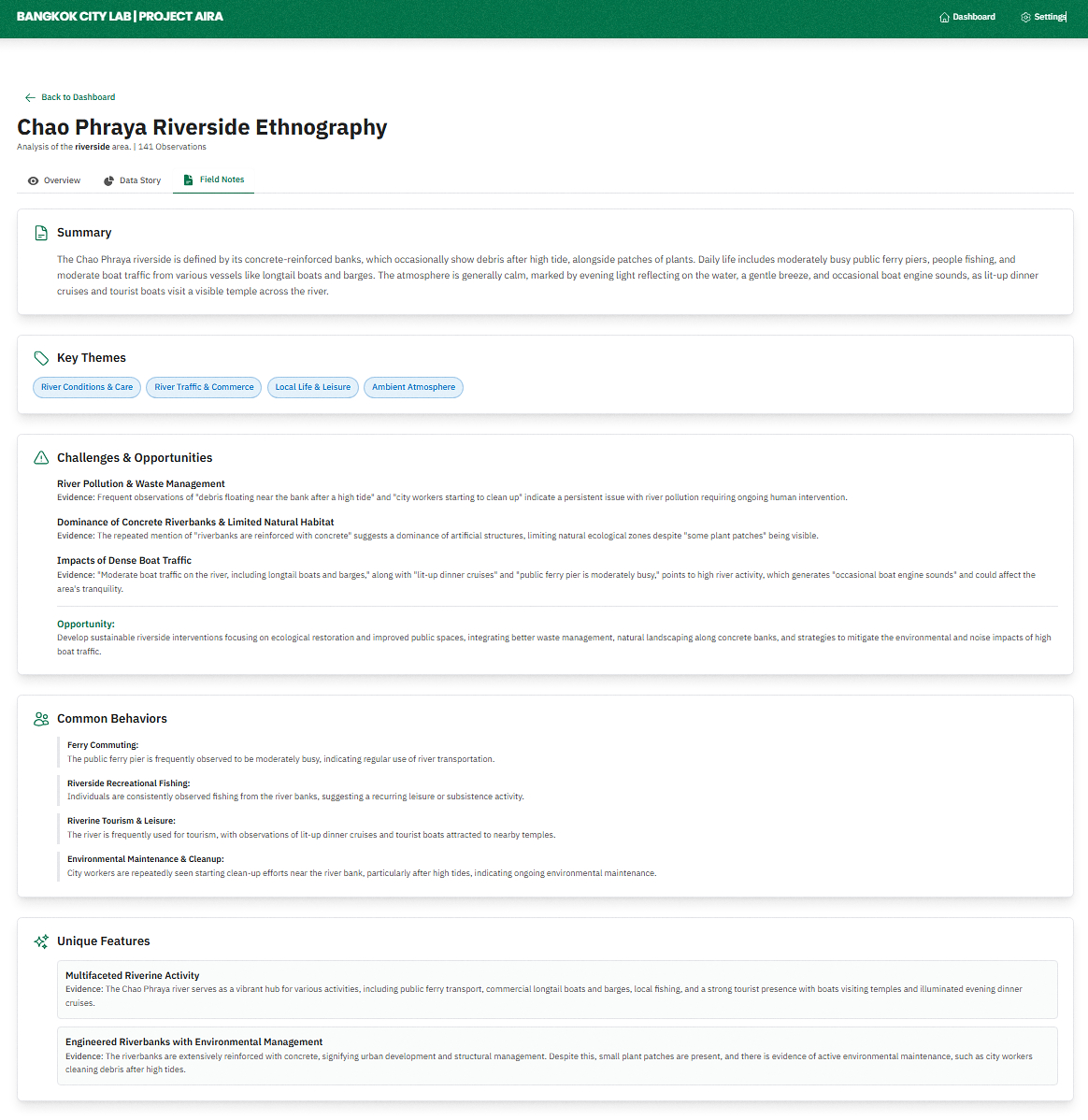
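A mood-distribution chart needs each label's share of the photos. The hypothetical helper below normalizes free-text mood labels (assumed to be short strings such as "relaxed") and returns their proportions, most frequent first; the label vocabulary is an assumption, since the project does not list its mood categories.

```python
from collections import Counter

def mood_distribution(moods):
    """Return (mood, share) pairs, most frequent first.

    Labels are stripped and lower-cased so "Relaxed" and "relaxed "
    count together; the label vocabulary itself is an assumption.
    """
    counts = Counter(label.strip().lower() for label in moods)
    total = sum(counts.values())
    return [(mood, n / total) for mood, n in counts.most_common()]

print(mood_distribution(["Relaxed", "busy", "relaxed ", "quiet"]))
# [('relaxed', 0.5), ('busy', 0.25), ('quiet', 0.25)]
```

Normalizing labels before counting matters because a language model may phrase the same mood slightly differently from one photo to the next.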

Field Notes:

Offers narrative insights generated by AI, summarizing patterns, anomalies, and recurring behaviors across the dataset. This section provides context and supports interpretation.

Field Notes
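The Field Notes are written by an AI agent, but a plain statistical screen can serve as a cross-check when looking for anomalies. The sketch below swaps in a standard z-score test and is not AIRA's own method: it flags photos whose count (for example, people per photo at one site) lies more than a chosen number of standard deviations from the mean. The `flag_unusual` name and the threshold of 2 are arbitrary assumptions.

```python
from statistics import mean, pstdev

def flag_unusual(values, threshold=2.0):
    """Return indices of values whose z-score exceeds `threshold`.

    Uses the population standard deviation. This is a generic screen,
    not AIRA's own anomaly logic.
    """
    if len(values) < 2:
        return []
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # all values identical: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]

# Made-up people counts for one site; the last photo is unusually busy.
people_per_photo = [10, 12, 11, 9, 10, 11, 60]
print(flag_unusual(people_per_photo))  # [6]
```

With few photos per site this test is weak: a population z-score can never exceed the square root of n - 1, so groups of five or fewer photos are never flagged at a threshold of 2.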

Future Directions:

Project AIRA introduces a starting point for visual urban research using AI. The current prototype is used internally with researcher-collected field photos, but there are several directions that could be explored in future phases, depending on available resources, partnerships, and technical development.

One area of exploration is adapting AIRA to process selected CCTV or public camera feeds. While this would involve a different mode of data collection, it could complement researcher-led photography by enabling longer-term and continuous observation.

With expanded datasets, AIRA could also support correlational studies—for example, comparing greenery with perceived mood, or analyzing how time of day affects the use of public space. In addition, it may assist in evaluating urban interventions by comparing data collected before and after physical or policy changes.
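A correlational study such as greenery versus perceived mood first needs the categorical fields turned into numbers. The sketch below assumes hypothetical ordinal scales for greenery and mood (AIRA's actual categories are not published) and computes a Pearson correlation coefficient between them.

```python
import math

# Hypothetical ordinal scales; AIRA's real categories are not published.
GREENERY_SCORE = {"none": 0, "low": 1, "medium": 2, "high": 3}
MOOD_SCORE = {"tense": -1, "neutral": 0, "relaxed": 1}

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length, at least 2 items")
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return cov / (sx * sy)

def greenery_mood_correlation(records):
    """Correlate greenery with mood; unknown labels raise KeyError."""
    xs = [GREENERY_SCORE[r["greenery"]] for r in records]
    ys = [MOOD_SCORE[r["mood"]] for r in records]
    return pearson(xs, ys)

# Made-up records for illustration only.
sample = [
    {"greenery": "low", "mood": "tense"},
    {"greenery": "medium", "mood": "neutral"},
    {"greenery": "high", "mood": "relaxed"},
    {"greenery": "none", "mood": "neutral"},
]
print(round(greenery_mood_correlation(sample), 3))  # 0.632
```

Because both scales are ordinal, a rank-based measure such as Spearman's correlation may be more defensible, and a correlation on its own says nothing about cause. A before-and-after evaluation could reuse the same records, split by the date of the intervention.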

Looking further ahead, the system could contribute to predictive analysis or be integrated with other urban datasets—such as air quality, temperature, or noise levels—to support more holistic approaches to understanding urban environments.
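Integrating other urban datasets largely comes down to matching records by place and time. The hypothetical sketch below attaches to each photo the sensor reading (for example, PM2.5) recorded at the same location during the same clock hour. All field names, the hourly granularity, and the assumption that both datasets share one local clock are illustrative.

```python
from datetime import datetime

def attach_sensor_readings(photos, readings):
    """Pair each photo with the reading from the same location and hour.

    photos:   dicts with "location" and "taken_at" (naive datetime)
    readings: dicts with "location", "hour" (datetime on the hour) and
              a measurement such as "pm25". All keys are hypothetical,
              and both datasets are assumed to use the same local clock.
    Photos without a matching reading get "sensor": None.
    """
    index = {(r["location"], r["hour"]): r for r in readings}
    joined = []
    for photo in photos:
        # Truncate the photo timestamp to the start of its hour.
        hour = photo["taken_at"].replace(minute=0, second=0, microsecond=0)
        joined.append({**photo, "sensor": index.get((photo["location"], hour))})
    return joined

# Made-up example values.
photos = [
    {"location": "Site A", "taken_at": datetime(2024, 5, 1, 17, 42)},
    {"location": "Site B", "taken_at": datetime(2024, 5, 1, 9, 5)},
]
readings = [{"location": "Site A", "hour": datetime(2024, 5, 1, 17), "pm25": 38}]
for row in attach_sensor_readings(photos, readings):
    print(row["location"], row["sensor"])
```

Building the lookup dictionary once keeps the join linear in the number of photos and readings; a real deployment would also need to decide how to handle sensors that sit some distance from the photographed site.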

Project Team & Acknowledgements:

This project is a collaborative effort within Bangkok City Lab.

- Project Lead: Bangkok City Lab

- Concept, Design & Development: Future Curiosity Lab